💡 If you’re just getting started, you’ll have to rely on your best judgment a little more than on experience and data. That’s perfectly fine! You might get it wrong a few times while you’re dialing in, but even a rough estimate is better than going in blind. With each test, you can refine your sense of what’s realistic and lean more on data from previous tests.

A practical example:

Let’s say you’re testing a new headline on your pricing page.

Your current conversion rate is 4%. You decide that the smallest lift you care about is 25% (relative). In other words, you want to be able to detect an increase from 4% to 5%.

You plug these numbers into a sample size calculator with a 5% significance level and 80% power, and it tells you that you’ll need roughly 6,700 users per variant, about 13,500 in total.
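If you want to see what the calculator is doing, here is a minimal sketch of the standard normal-approximation formula for a two-sided, two-proportion test. The function name `sample_size_per_variant` is hypothetical, and dedicated calculators may use slightly different methods, so their figures can differ a little. For this example it lands at roughly 6,700 per variant.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_variant(baseline: float, relative_lift: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Hypothetical helper: approximate users needed per variant for a
    two-sided, two-proportion z-test (normal approximation)."""
    p1 = baseline                        # current conversion rate
    p2 = baseline * (1 + relative_lift)  # rate we want to be able to detect
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # ~1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # ~0.84 for 80% power
    p_bar = (p1 + p2) / 2                # pooled rate under "no difference"
    numerator = (z_alpha * sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    # Round up: you can't recruit a fraction of a user.
    return ceil(numerator / (p2 - p1) ** 2)


n = sample_size_per_variant(0.04, 0.25)  # 4% baseline, 25% relative lift
print(n, 2 * n)  # 6745 13490
```

Note how the squared difference `(p2 - p1) ** 2` sits in the denominator: that is why small lifts get expensive so quickly.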

If your page gets 1,500 visitors a day, you’ll need to run the test for 9 days to hit that sample size. Simple math, but crucial.

Now, what if you said, “I’d be happy with just a 0.1 percentage-point improvement,” say from 4.0% to 4.1%? That’s technically measurable but not practically useful. You’d need hundreds of thousands of users per variant to prove it wasn’t a fluke. And even if it were real, would that tiny bump be worth the dev hours and planning time?
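To see why, here is a worked sketch using a common (unpooled) normal-approximation formula, assuming a 5% significance level and 80% power, so that $(z_{1-\alpha/2} + z_{1-\beta})^2 \approx (1.96 + 0.84)^2 \approx 7.85$, and reading the small lift as 0.1 percentage points (4.0% to 4.1%). Because the difference is squared in the denominator, shrinking it tenfold multiplies the required sample by nearly a hundred.

$$
\begin{aligned}
n &\approx \frac{(z_{1-\alpha/2} + z_{1-\beta})^2\,\bigl[p_1(1-p_1) + p_2(1-p_2)\bigr]}{(p_2 - p_1)^2} \\[4pt]
4\% \to 5\%:\quad n &\approx \frac{7.85 \times (0.0384 + 0.0475)}{0.01^2} \approx 6{,}740 \text{ per variant} \\[4pt]
4.0\% \to 4.1\%:\quad n &\approx \frac{7.85 \times (0.0384 + 0.0393)}{0.001^2} \approx 610{,}000 \text{ per variant}
\end{aligned}
$$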

That’s the key: don’t just ask “Can I detect a change?” Ask, “Is the change worth chasing?”

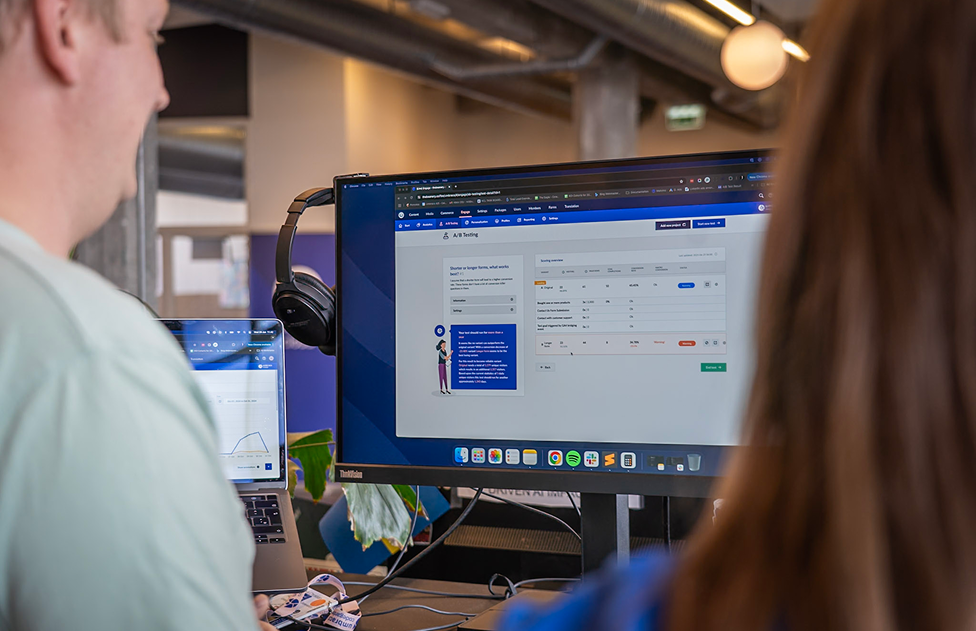

💡 If you already have data available in Umbraco Engage, the tool will help you determine this.

You can also use a sample size calculator, like the one here, to check whether your sample size is large enough. If you prefer, we’ve also built an AI A/B-test assistant for ChatGPT that can run the numbers with you and help you interpret them.