What is A/B testing? How to run experiments that drive results

Why guessing isn’t a strategy

In today’s digital marketing world, intuition is nice, but data wins.

If you’ve ever debated which version of a headline to use, wondered whether a different CTA button would convert better, or tried to justify a design change to your team with “it just feels right”, you’re in the right place.

A/B testing replaces guesswork with evidence. It gives you the confidence to make changes because the data shows they improve performance, not because you hope they will.

This guide is for:

- Marketers who want to get more value out of their website.

- Teams looking to scale their testing without endless dev cycles.

- Curious content folks wondering if small changes really can move the needle (spoiler alert: they can).

We’ll break it all down — from what A/B testing actually is and how to run your first test, to how Umbraco Engage helps you test content natively, right inside your Content Management System (CMS). No plugins, no clunky integrations, and no spreadsheets (unless you like spreadsheets, in which case… we’re not judging).

Let’s get started.

Explore Umbraco and A/B testing

Do you want to see it for yourself? Try out our interactive product tour of A/B testing in Umbraco Engage.

What is A/B testing and why it matters

At its core, A/B testing is a way to compare two versions of something — a webpage, a button, a headline — to see which one performs better. You show Version A to half of your audience, Version B to the other half, and then measure which one drives more conversions, clicks, signups, or whatever metric matters to you.

Simple concept. Big impact.

Why it works

A/B testing strips away assumptions. Instead of “I think this CTA will perform better,” you get to know — backed by real user behavior, not opinions or internal debates.

For example:

- You’re launching a new landing page. Do people respond better to “Start Free” or “Try It Now”?

- Your blog CTA isn’t converting. Should it be at the top of the article or the end?

- Your product detail page is underperforming. Will a cleaner layout reduce friction?

These aren’t gut-feeling decisions anymore. They’re testable hypotheses, and A/B testing turns your website into a living experiment.

Real-world A/B testing example

Imagine you're running an online store and want to promote a new product category, let’s say wireless headphones.

In Version A of your homepage, you feature them in a clean hero banner with a bold CTA: Shop Now. In Version B, you go all in with a video banner showing people using the headphones in real life.

Both look good. Your designer has a favorite. Your CMO has an opinion. But your users? They get to decide.

You launch both versions and let the data roll in. Once the test has reached its planned sample size, one version leads to significantly more product views and checkouts.

Congratulations, you just ran an A/B test. And more importantly, you made a smart decision rooted in what actually works.

Why it matters now more than ever

With users expecting lightning-fast, relevant, personalized experiences, websites can’t afford to stand still. Iteration is everything. A/B testing helps you:

- Increase conversions without needing more traffic

- Learn what actually matters to your users

- Build internal confidence in data-driven decisions

Whether you're optimizing a homepage, improving a checkout flow, or fine-tuning a blog CTA, A/B testing gives you the tools to do it systematically — and successfully.

The mechanics of a good A/B test

So you’re ready to test. But before you dive in and start swapping buttons or headlines, let’s walk through what separates good A/B testing from “we changed something and hoped for the best.”

A great test starts with a plan — and that plan starts with a hypothesis.

1. Start with a clear hypothesis

Every test should begin with a simple, focused statement of what you believe will happen and why.

Example:

"If we move the CTA button higher on the page, more users will see it and click it, resulting in more signups."

That’s a testable, measurable, and purposeful hypothesis. You’re not just making a change for the sake of it — you’re validating a specific theory about user behavior.

2. Focus on a single variable

It’s tempting to test everything at once: new headline, new image, new layout, new font — go big or go home, right?

Not quite.

If you change five things and conversions improve (or tank), you won’t know which change made the difference. By testing one variable at a time, you get clarity — not chaos.

What you can test:

- Headlines or body copy

- Button text or color

- Page layout

- Images or icons

- Placement of elements (e.g. signup form, CTA)

- Navigation structure

3. Understand statistical basics (No PhD required)

You don’t need to be a data scientist to run a valid A/B test — but a few basics go a long way.

- Sample size matters: You need enough users to detect a meaningful difference. If only 30 people saw your test, it’s too early to call a winner.

- Run it long enough: Let the test run through full traffic cycles (weekdays and weekends) so day-of-week swings don’t skew the result.

- Watch for significance: Most tools use p-values to tell you when a result is statistically significant, usually when the p-value drops below 0.05 (a 95% confidence level). In plain terms, a difference that large would show up less than 5% of the time if the two versions actually performed the same.
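If you’re curious what “enough users” means in actual numbers, here’s a minimal Python sketch of a common normal-approximation formula for a two-sided test comparing two conversion rates. The function name, the 80% power default, and the example inputs (a 5% baseline conversion rate and a hoped-for 20% relative lift) are our own assumptions for illustration; Umbraco Engage and CROBot may calculate sample sizes differently.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_variant(baseline_rate: float, relative_lift: float,
                            confidence: float = 0.95, power: float = 0.80) -> int:
    """Visitors needed in EACH variant for a two-sided, two-proportion test.

    baseline_rate: the control's current conversion rate (0.05 = 5%).
    relative_lift: the smallest improvement worth detecting (0.20 = +20%).
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    alpha = 1 - confidence
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided critical value
    z_beta = NormalDist().inv_cdf(power)            # term for the desired power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    n = (z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2
    return ceil(n)


if __name__ == "__main__":
    # Hypothetical page: 5% baseline, hoping to detect a lift to 6%.
    print(sample_size_per_variant(0.05, 0.20))
```

With those assumptions it lands at a little over 8,000 visitors per variant, which is why a test seen by 30 people can’t crown a winner. Smaller expected lifts need even more traffic.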

4. Avoid these common pitfalls

Even experienced teams fall into a few classic traps:

- Testing without a goal: If you don’t know what success looks like, you won’t know when you’ve won.

- Stopping too soon: Don’t declare a winner after one day of testing (unless you’ve got millions of users). Let it reach the required sample size.

- Letting tests run too long: Don’t stretch a test just to chase significance. It can skew results and block other tests that could deliver real gains.

- Overcomplicating things: Start simple. One variable, one goal, one insight.

Good news: Umbraco Engage handles the math for you, including significance scoring and goal tracking through its built-in analytics. We’ve also built a custom GPT, CROBot, that helps you work out these numbers by asking you the questions it needs to calculate them. It’s a ChatGPT assistant for A/B testing, and you can try it in ChatGPT for free, with no signup required.

How to run an A/B test on your website

Ready to go from theory to practice? Here's your step-by-step roadmap to running a solid A/B test, whether you're optimizing a headline, a form, or an entire page flow.

1. Set a clear goal

Every test starts with a question: What do we want users to do?

That could be:

- Clicking a CTA button

- Completing a form

- Signing up for a newsletter

- Reaching a confirmation page

Choose one goal per test (the thing you want to improve) and make sure it’s measurable. If you’re using Umbraco Engage, you can define both macro and micro goals directly in the Analytics settings.

2. Choose what to test

Now pick your variable. Keep it simple — one element at a time:

- Headline text

- Button label

- Hero image

- Form layout

- Navigation order

If you’re just starting out, choose something high-impact that a lot of users interact with. Big visibility = faster insights.

3. Define control vs. variant

- Control (A): Your current version, the baseline.

- Variant (B): The new version you want to test.

Only change the thing you're testing. Everything else should stay exactly the same to keep the results clean.

4. Split your traffic

A/B testing works by randomly splitting your audience. Half the visitors see Version A, the other half see Version B. Most platforms (including Umbraco Engage) do this automatically.

You don’t need to overthink this — just make sure your audience is large enough to get meaningful results. If you’re working with low traffic, consider testing site-wide elements or running the test for a longer period.
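Umbraco Engage handles the split for you, but if you’re wondering how a tool can split traffic randomly and still show each visitor the same version on every visit, one common approach is hash-based bucketing. This is a minimal sketch under that assumption, not a description of how Engage works internally; the function name and the visitor and experiment IDs are hypothetical.

```python
import hashlib


def assign_variant(visitor_id: str, experiment_id: str,
                   variants: tuple[str, ...] = ("A", "B")) -> str:
    """Deterministically assign a visitor to a variant.

    Hashing visitor + experiment gives an effectively random, roughly even
    split, and the same visitor always lands in the same bucket.
    """
    key = f"{experiment_id}:{visitor_id}".encode("utf-8")
    bucket = int(hashlib.sha256(key).hexdigest(), 16) % len(variants)
    return variants[bucket]


if __name__ == "__main__":
    counts = {"A": 0, "B": 0}
    for i in range(10_000):
        counts[assign_variant(f"visitor-{i}", "homepage-hero")] += 1
    print(counts)  # close to 5,000 each
```

Including the experiment ID in the hash means a visitor who lands in A for one test isn’t automatically in A for every other test.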

5. Run the test and measure results

Let your test run until you’ve hit a statistically valid sample size — ideally over a full traffic cycle (weekdays + weekends).

Then review:

- Which version converted better?

- Was the difference significant or just a blip?

- What can we learn, even if the result was a tie?
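To make “significant or just a blip?” more concrete, here’s a sketch of a classic two-proportion z-test, one standard way to turn conversion counts into a p-value. The counts are hypothetical, and your testing tool may use a different method (a Bayesian model, for example), so treat this as an illustration rather than how Umbraco Engage scores results.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, visitors_a: int,
                          conv_b: int, visitors_b: int) -> tuple[float, float]:
    """Return (relative lift of B over A, two-sided p-value)."""
    rate_a = conv_a / visitors_a
    rate_b = conv_b / visitors_b
    # Pooled rate: the best estimate if there were no real difference.
    pooled = (conv_a + conv_b) / (visitors_a + visitors_b)
    se = sqrt(pooled * (1 - pooled) * (1 / visitors_a + 1 / visitors_b))
    z = (rate_b - rate_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    lift = (rate_b - rate_a) / rate_a
    return lift, p_value


if __name__ == "__main__":
    # Hypothetical numbers, not real test data.
    lift, p = two_proportion_z_test(conv_a=400, visitors_a=8000,
                                    conv_b=480, visitors_b=8000)
    verdict = "significant" if p < 0.05 else "not significant yet"
    print(f"Lift: {lift:+.1%}, p = {p:.4f} ({verdict} at 95%)")
```

In this made-up example, 5% vs. 6% conversion on 8,000 visitors per variant gives a p-value well below 0.05. Shrink the gap to 400 vs. 410 conversions and the same test says “not significant yet”.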

If you're using Umbraco Engage, you’ll get clear variant performance data and can easily declare a winner from right inside the CMS.

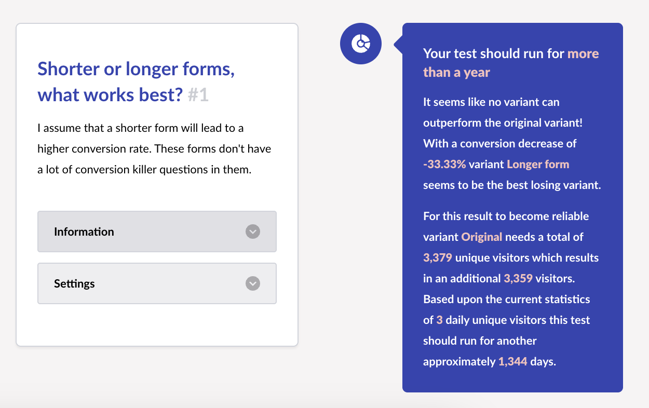

Here’s a tip: if Umbraco Engage already has data on your goal, it can tell you how long your test should run. If not, you can use CROBot to calculate it from a few variables.
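If you’d like to sanity-check an estimate yourself, the arithmetic behind test duration is straightforward: multiply the visitors you need per variant by the number of variants, divide by the daily traffic entering the test, and round up to whole weeks so you cover full traffic cycles. The sketch below assumes you already have a per-variant sample size; the function name, the 8,155 figure, and the 1,200 daily visitors are hypothetical, and this is not the calculation Umbraco Engage or CROBot performs.

```python
from math import ceil


def estimated_test_days(visitors_per_variant: int, num_variants: int,
                        daily_visitors: int, traffic_share: float = 1.0,
                        round_to_full_weeks: bool = True) -> int:
    """Rough duration estimate for an A/B test.

    traffic_share: fraction of site traffic that enters the test (1.0 = all).
    """
    total_needed = visitors_per_variant * num_variants
    daily_in_test = daily_visitors * traffic_share
    days = ceil(total_needed / daily_in_test)
    if round_to_full_weeks:
        # Cover complete weekday/weekend cycles.
        days = ceil(days / 7) * 7
    return days


if __name__ == "__main__":
    # Hypothetical inputs: 8,155 visitors per variant, 2 variants, 1,200 visitors/day.
    print(estimated_test_days(8155, 2, 1200))
```

Sending only half your traffic into the test doubles the time, so the same inputs with `traffic_share=0.5` come out at four weeks instead of two.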

Choosing the right A/B testing tool

There’s no shortage of A/B testing platforms out there — from enterprise-grade solutions with endless customization to browser plugins that let you tweak headlines on the fly.

But the real question isn’t: What’s the most powerful tool?

It’s: What’s the right tool for the way your team works?

What to look for in a testing tool

Here’s what actually matters when choosing your A/B testing setup:

- Ease of use: Can marketers and editors set up tests without waiting on developers?

- Integration with your stack: Does it work with your CMS, analytics, and goal tracking?

- Speed to insight: How quickly can you go from idea → test → result?

- Support for your test types: Does it allow simple content swaps and more advanced page-level testing?

- Clarity in results: Are the insights easy to understand, share, and act on?

The goal isn’t to overwhelm your team with options. It’s to give them a workflow that encourages more testing, not more meetings.

Standalone tools vs. native testing

Many companies turn to powerful external platforms for A/B testing — and they can work well, especially if you have a full dev and analytics team on standby.

But they often come with trade-offs:

- Separate environments to manage

- Long setup times

- Fragmented data (especially around goals and CMS content)

- Added cost and complexity

That’s where CMS-native testing tools stand out, and why we built A/B testing directly into Umbraco Engage. Curious to see what A/B testing looks like in Umbraco Engage? Take our product tour of the A/B testing features.

Why should I have an A/B testing platform built into my CMS?

Testing inside your CMS brings a few serious advantages:

- No code required (but devs can extend it if needed)

- Use your existing content structure: no cloning pages or managing duplicates

- Track goals directly in the system, both macro and micro

- Visualize variant performance without switching tools

It means you can go from “we should test this” to actually running the test — inside Umbraco Engage — in minutes, not days.

Advanced A/B testing tips

Once you've run a few solid A/B tests, it’s easy to get hooked — and for good reason. But beyond button colors and headline tweaks, there's a whole world of smarter, deeper experimentation.

Here’s how to take your testing strategy to the next level:

Try multivariate testing (when it makes sense)

If A/B testing is about testing one thing at a time, multivariate testing (MVT) lets you test combinations of changes. Think headlines + images + CTAs, all in a single experiment.

Great for:

- High-traffic pages

- Complex layouts

- Teams with strong analytics chops

But it needs a lot more data. If your traffic is modest, stick to classic A/B tests for clearer insights.
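The data problem with multivariate testing comes down to multiplication: every combination of options becomes its own version that needs traffic. Here’s a tiny sketch, assuming a full-factorial test where every combination is shown, with hypothetical numbers (two headlines, three hero images, two CTA labels, 20,000 weekly visitors).

```python
from math import prod


def mvt_combinations(options_per_element: dict[str, int]) -> int:
    """Number of versions a full-factorial multivariate test has to serve."""
    return prod(options_per_element.values())


if __name__ == "__main__":
    # Hypothetical page elements and option counts.
    elements = {"headline": 2, "hero_image": 3, "cta_label": 2}
    weekly_visitors = 20_000
    combos = mvt_combinations(elements)
    print(f"{combos} combinations, about {weekly_visitors // combos} visitors each per week")
    print(f"Classic A/B test: {weekly_visitors // 2} visitors per version per week")
```

Twelve versions share the traffic that a classic A/B test splits two ways, so each one gets roughly a sixth of the visitors and needs about six times as long to collect the same amount of data.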

Don’t sleep on micro-conversions

Not every test needs to lead to a sale or signup. Sometimes, progress toward a goal is just as valuable.

Examples of micro-conversions:

- Time on page

- Clicks on internal links

- Form interactions

- Scroll depth

Tracking these gives you more opportunities to learn, especially on pages that sit earlier in the user journey.

Segment your results

One variant might crush it on mobile, but flop on desktop. Or work well for new visitors, but not for returning ones.

Segment your test data by:

- Device

- Traffic source

- User type (new vs. returning)

With Umbraco Engage, you can pair A/B testing with personalization to fine-tune experiences for different audience segments, a powerful one-two punch.
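If you export raw results, splitting them by segment is a matter of grouping conversions by variant and segment. Here’s a minimal sketch with made-up visit records; the `(variant, segment, converted)` tuple format and the function name are assumptions. Keep in mind that each segment holds only part of your traffic, so a segment-level difference needs its own significance check before you act on it.

```python
from collections import defaultdict


def conversion_by_segment(visits):
    """visits: iterable of (variant, segment, converted) tuples.

    Returns {(variant, segment): conversion rate}.
    """
    totals = defaultdict(lambda: [0, 0])  # (variant, segment) -> [conversions, visitors]
    for variant, segment, converted in visits:
        cell = totals[(variant, segment)]
        cell[0] += int(converted)
        cell[1] += 1
    return {key: conv / n for key, (conv, n) in totals.items()}


if __name__ == "__main__":
    # Hypothetical, hand-made data purely to show the mechanics.
    sample = (
        [("A", "mobile", True)] * 30 + [("A", "mobile", False)] * 470
        + [("B", "mobile", True)] * 45 + [("B", "mobile", False)] * 455
        + [("A", "desktop", True)] * 40 + [("A", "desktop", False)] * 460
        + [("B", "desktop", True)] * 35 + [("B", "desktop", False)] * 465
    )
    for (variant, segment), rate in sorted(conversion_by_segment(sample).items()):
        print(f"{segment:8} {variant}: {rate:.1%}")
```

In this invented data, the variant converts better on mobile (9.0% vs. 6.0%) but worse on desktop (7.0% vs. 8.0%), exactly the kind of split a single overall number would hide.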

Low traffic? Go bigger or go broader

Not every site gets 50,000 visits a week, and that’s okay. If you’re working with limited traffic, try:

- Site-wide tests (e.g. navigation changes, CTA patterns)

- Longer durations to allow more users to enter the test

- Directional insights rather than hard statistical wins

- A lower confidence level: 90% instead of 95% (a significance threshold of 0.10 instead of 0.05), accepting a higher risk of false positives
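Why does dropping to 90% help? Under a common normal-approximation formula for comparing two conversion rates, the required sample size scales with the square of the sum of two z-values: one for the confidence level and one for statistical power. Assuming 80% power ($z_{1-\beta} \approx 0.842$) and everything else held equal:

```latex
n \propto \left(z_{1-\alpha/2} + z_{1-\beta}\right)^2

\text{95\% confidence: } (1.960 + 0.842)^2 \approx 7.85
\text{90\% confidence: } (1.645 + 0.842)^2 \approx 6.19

\frac{6.19}{7.85} \approx 0.79 \quad\Rightarrow\quad \text{roughly 21\% fewer visitors per variant}
```

The catch: at 90%, two identical versions will show a “significant” difference about 1 time in 10 instead of 1 time in 20.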

Remember: imperfect data is still better than no data. Just don’t over-interpret tiny results.

Use heatmaps and analytics to pre-test ideas

Before running a test, see where users click, scroll, or get stuck. Heatmaps, session recordings, and scroll-depth reports can reveal where friction lives.

This helps you test smarter, not just more.

What to do with your A/B test results?

You’ve run the test, watched the traffic roll in, and now you’re staring at a graph. One variant performed better… but what does that actually mean?

Let’s break it down.

Interpret the outcome — even if it’s not a win

Not every test ends with a clear winner, and that’s okay.

Here’s how to think about the three most common outcomes:

- Winner: The variant outperforms the control with statistical significance. You’ve got a data-backed improvement, so it’s time to implement it.

- Inconclusive: Both versions perform about the same. You didn’t lose; you just learned that this change didn’t make an impact. On to the next idea.

- Loser: The control wins. Great: now you know what not to do, and you saved your users from a potential dip in performance.

Even when a test “fails,” the insight is valuable. You’ve narrowed the field and made room for better ideas.

Know what’s significant vs. what’s just noise

Just because Variant B had a slightly higher conversion rate doesn’t mean it’s the winner — unless that difference is statistically significant.

Most tools (like Umbraco Engage) calculate this for you, but keep this in mind:

- Statistical significance = the difference is large enough that it’s unlikely to be due to chance alone.

- Directional insight = a promising pattern, but not definitive.

Both are useful — just don’t treat a directional win like a guaranteed game-changer.
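One way to see the line between significant and directional is a confidence interval for the gap between the two conversion rates: if the whole interval sits above zero, the variant’s lead is significant; if it straddles zero but leans positive, you have a directional signal. This is a minimal sketch using the simple Wald interval (one of several methods) with hypothetical counts and function names; it isn’t necessarily how Umbraco Engage reports results.

```python
from math import sqrt
from statistics import NormalDist


def diff_interval(conv_a: int, n_a: int, conv_b: int, n_b: int,
                  confidence: float = 0.95) -> tuple[float, float]:
    """Wald confidence interval for (conversion rate B - conversion rate A)."""
    rate_a, rate_b = conv_a / n_a, conv_b / n_b
    se = sqrt(rate_a * (1 - rate_a) / n_a + rate_b * (1 - rate_b) / n_b)
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    diff = rate_b - rate_a
    return diff - z * se, diff + z * se


def read_result(low: float, high: float) -> str:
    """Translate an interval into the outcomes described in this article."""
    if low > 0:
        return "significant: variant wins"
    if high < 0:
        return "significant: control wins"
    midpoint = (low + high) / 2
    if midpoint > 0:
        return "directional: leans toward the variant"
    if midpoint < 0:
        return "directional: leans toward the control"
    return "no difference detected"


if __name__ == "__main__":
    # Hypothetical counts: 8,000 visitors per version in both scenarios.
    for conv_b in (440, 480):
        low, high = diff_interval(400, 8000, conv_b, 8000)
        print(f"B={conv_b}: [{low:+.2%}, {high:+.2%}] -> {read_result(low, high)}")
```

With 440 conversions the interval still includes zero, so it’s a directional hint; with 480 the whole interval is above zero.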

And here’s another tip: re-run your old tests after 12 months or so and see what happens. Your audience, and what works for it, might have changed.

Document your learnings

One of the biggest missed opportunities in A/B testing? Not writing things down.

Every test should be logged:

- What you tested

- Your hypothesis

- The outcome (win/loss/inconclusive)

- What you’ll try next

Over time, this becomes an internal goldmine — a playbook of what works for your audience, your product, your brand. There are multiple ways this can be done.

We use Airtable to keep track of our backlog of ideas, test progress, and results. But you can start with a good old Excel file, Google Sheets, or Google Docs. Just keep it structured and accessible.

You’re also welcome to borrow our old A/B test hypothesis template: make a copy and save it to your own drive. Access the A/B test hypothesis template

Turn testing into a habit

Great testing isn’t about one big win. It’s about continuous improvement.

Make it part of your team’s rhythm:

- One test per sprint or content cycle

- Regular test planning meetings

- Shared backlog of ideas tied to user goals

When testing becomes second nature, so does growth.

Common A/B testing mistakes (and how to avoid them)

Even smart teams slip up when it comes to testing. Here are the most common traps — and how to sidestep them before they waste your time (or worse, your data).

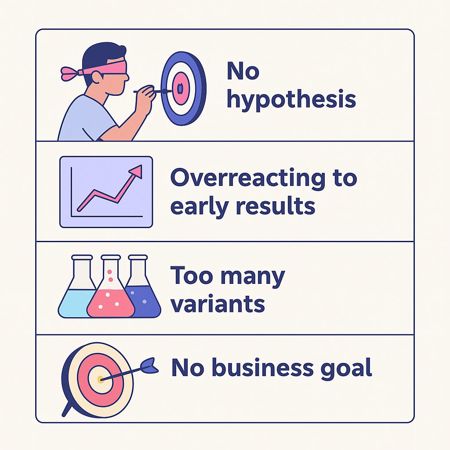

Testing without a hypothesis

“If we change it… maybe it’ll work?”

That’s not a test — that’s a shot in the dark. Every experiment needs a reason behind it. Without a hypothesis, you’re not learning anything, even if you see a change in results.

Fix it: Always define what you’re testing and why you think it will perform better. If in doubt, CROBot in ChatGPT can help you define your hypothesis, or you can use our old A/B test hypothesis template.

Overreacting to early results

You launched the test yesterday, and Variant B is up 35%. Time to call it, right? We’ve been guilty of this too.

But not so fast. You can easily get carried away.

Traffic ebbs and flows. Early results are often misleading, especially with small sample sizes.

Fix it: Let tests run through a full traffic cycle and ensure you have calculated the required sample sizes before you start the test. Be patient. Trust the process. You can use CROBot to calculate both the time and the sample size you need. Umbraco Engage also guides you.
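Why do early results mislead? A quick way to see it is an A/A simulation: both “versions” share exactly the same true conversion rate, so any winner is a false positive. The sketch below assumes hypothetical traffic (100 visitors per version per day for two weeks), a 5% conversion rate, and a simple z-test, then counts how often checking every day would have declared a winner compared with checking once at the planned end. It illustrates the peeking problem in general, not the behavior of any specific tool.

```python
import random
from math import sqrt
from statistics import NormalDist


def p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided p-value from a pooled two-proportion z-test."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    if pooled in (0.0, 1.0):
        return 1.0  # no variation yet, nothing to test
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (conv_b / n_b - conv_a / n_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


def simulate_aa_tests(runs: int = 300, days: int = 14, visitors_per_day: int = 100,
                      rate: float = 0.05, seed: int = 42) -> tuple[float, float]:
    """Both arms share the SAME true rate, so every 'winner' is a false positive.

    Returns (share of runs a daily peek would have called,
             share of runs significant at the single check on the last day).
    """
    rng = random.Random(seed)
    called_by_peeking = 0
    called_at_end = 0
    for _ in range(runs):
        conv_a = conv_b = visitors = 0
        ever_significant = False
        p = 1.0
        for _day in range(days):
            conv_a += sum(rng.random() < rate for _ in range(visitors_per_day))
            conv_b += sum(rng.random() < rate for _ in range(visitors_per_day))
            visitors += visitors_per_day
            p = p_value(conv_a, visitors, conv_b, visitors)
            if p < 0.05:
                ever_significant = True  # a daily check would have stopped here
        called_by_peeking += ever_significant
        called_at_end += p < 0.05  # p from the final day only
    return called_by_peeking / runs, called_at_end / runs


if __name__ == "__main__":
    peeking, single_look = simulate_aa_tests()
    print(f"False winners when checking daily: {peeking:.0%}")
    print(f"False winners when checking once at the end: {single_look:.0%}")
```

Because the daily check includes the final day, the peeking rate can never be lower than the single-look rate, and in practice it comes out noticeably higher. That’s why a test that’s up 35% after one day proves very little.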

Testing too many variants

More versions = more insights, right? Not exactly.

Each extra variant spreads your traffic thinner and increases the time needed to reach significance.

Fix it: Stick to one variant when possible. Only test multiple versions if you’ve got the traffic to support it.
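Here’s a rough sketch of why extra variants slow things down, using a common normal-approximation sample size formula. It assumes hypothetical inputs (a 5% baseline, hoping to detect 6%, 1,200 daily visitors, 80% power) and applies a Bonferroni correction, one simple and conservative way to keep the overall false-positive risk at 5% when several challengers are each compared against the control. The function names are our own, and your tool may correct for multiple comparisons differently, or not at all.

```python
from math import ceil
from statistics import NormalDist


def n_per_arm(p_control: float, p_variant: float, alpha: float, power: float = 0.80) -> int:
    """Normal-approximation sample size per arm for a two-sided two-proportion test."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = p_control * (1 - p_control) + p_variant * (1 - p_variant)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p_variant - p_control) ** 2)


def days_needed(challengers: int, p_control: float = 0.05, p_variant: float = 0.06,
                daily_visitors: int = 1200, alpha: float = 0.05) -> int:
    """Days to fill every arm when `challengers` variants are tested against one control."""
    per_comparison_alpha = alpha / challengers  # Bonferroni: split the 5% error budget
    arms = challengers + 1                      # the control plus each challenger
    total_visitors = n_per_arm(p_control, p_variant, per_comparison_alpha) * arms
    return ceil(total_visitors / daily_visitors)


if __name__ == "__main__":
    for challengers in (1, 2, 3):
        print(f"{challengers} challenger(s): about {days_needed(challengers)} days")
```

With these made-up numbers, adding a second challenger pushes the test from about two weeks to more than three: the traffic is split more ways, and each comparison has to clear a stricter bar.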

Not aligning tests with business goals

Testing a new button color might be fun, but does it move the needle, and does it fit your brand colors?

Every test should map back to a real business objective — conversion, engagement, retention, etc.

Fix it: Tie each experiment to a clear, measurable goal that matters to your team.

Running tests “just to test”

If your test ends with, “Well, at least we tested something,” you’ve already lost.

Random experiments waste time and erode confidence in the process.

Fix it: Build a backlog of meaningful, user-informed test ideas. Use heatmaps, user feedback, or analytics to guide your next move. If you don’t know where to start, let CROBot help. We’ve already given it a lot of test ideas and results to draw on, so share a screenshot or a link to your page and let it suggest where to begin.

(Bonus) Don’t let testing replace thinking

Testing is a tool — not a substitute for thinking.

If your pricing page is underperforming, testing three button labels won’t fix a fundamental UX problem.

Fix it: Use A/B testing to validate smart ideas, not avoid strategic decisions.

Start testing, start growing

A/B testing isn’t just for massive marketing teams or conversion specialists with calculators. It’s for anyone who wants to make smarter decisions, backed by real user behavior — not gut feeling or design debates.

Start simple. Test a headline. Rewrite a CTA. Rethink your form layout. The key is to start — and keep learning.

With Umbraco Engage, A/B testing becomes something your whole team can do. It’s built into your CMS, built for marketers.

Want help getting started?

No complex setup. No third-party bloat. Just a smarter way to optimize your site — one test at a time.